Ghost blogging on Azure Container Apps

Introduction

Hosting a blog these days doesn’t have to cost anything. There are plenty of hosted solutions such as Medium, Weebly and Wix. But as technology-minded people who like to go the extra mile, we didn’t go for the easiest solution: we chose to run our blog on Azure Container Apps using the Ghost blogging platform.

In this post, I’ll go deeper into how the site is hosted and how the deployment is currently done, which is not yet automated.

The Software

Ghost is a well-known blogging solution in the self-hosted community. It’s available as a SaaS service, but you can also self-host it as a containerized application, using either the container images provided on Docker Hub or an image you build yourself.

There are lots of similar frameworks in the self-hosted space. One of the best known is WordPress, but it has to be closely maintained because its popularity makes it an attractive target for people searching for vulnerabilities. Then there are the static site generators, which are based on Markdown (a lightweight markup language). Their content is easy to import into other tools and to version via Git. However, we also wanted to be able to write articles on the move and to let readers comment. Another advantage is that Ghost is a very nice package as is. Robbe did some customization with custom JavaScript and HTML, but that’s not necessary if you just want to get started!

Architecture

Now that the decision on the software has been made, the bigger question is: where do we host it? We were contemplating hosting it in an on-premises Kubernetes cluster, somewhat as a challenge, but then a new container solution came along in Azure. This container platform gives us the best of all the other Azure offerings for our use case. However, just to be sure, let’s look at our requirements.

Requirements

I needed a solution that allows the following:

- Scalable both for performance & pricing

- Low idle pricing, if possible serverless

- No warm-up time (scaling to zero is not an option)

- Support containers as the Docker Hub compiled version will be deployed

- No support for databases is required, as we will use a PaaS database here

Azure Container Solutions

- Azure Container Instances

- Docker Runtime in Azure App Services

- Azure Container Apps

- Azure Kubernetes Services

- Azure Red Hat OpenShift / Service Fabric

For good measure, here are a few sentences on why we chose Azure Container Apps over the other container solutions currently available in Azure. There are lots of articles out there that go into great detail about the numerous differences and use cases, so I’ll stick to what matters for our blog:

- NEW = COOL (technical fact)

- It has a lot of K8S features baked in, some of which are being backported to AKS native (KEDA autoscaler, Envoy proxy, versioning, …)

- Completely serverless, although it can also use Workload Profiles with dedicated hardware

- Scalable down to zero, but in our case we keep a minimum of 1 replica to avoid a cold start

- As it is built on AKS, it runs any Linux-based container image

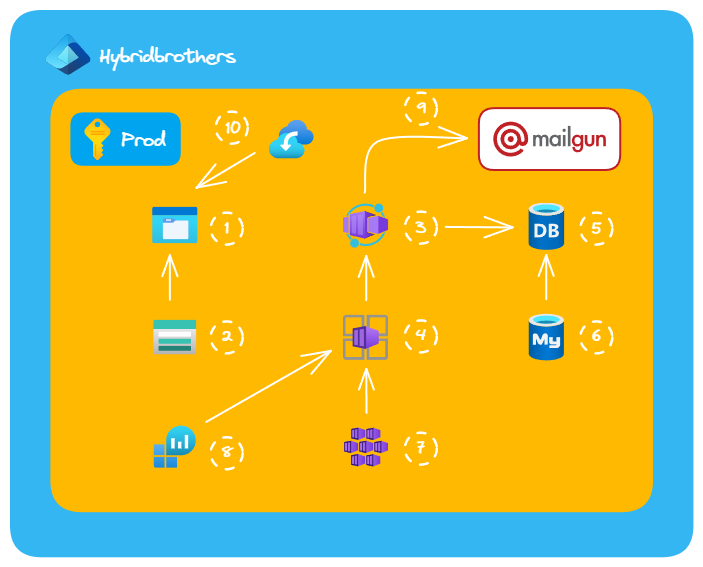

Azure Architecture

- Azure File Share

- Storage Account (General Purpose V2)

- Azure Container App

- Azure Container Apps Environment

- MySQL DB

- MySQL Server

- AKS (managed by Microsoft)

- Log Analytics for ContainerInsights

- Mailgun integration

- Recovery Services Vault

Above is a design I made which has evolved over time. There are 4 big parts in the architecture:

- Storage

- Database

- Compute

- External services

Below you’ll get a short overview of how to configure these items. As with most things, I first deployed everything via the Azure Portal and, once the setup was tested and verified, reverse-engineered it into code.

Storage

The software requires some persistent storage, mostly for static data: among others, the images, themes and uploaded files shown on the blog. Since this is normally mapped to a regular folder in a Docker environment, we decided to use a volume mount (which is equivalent to an AKS Persistent Volume with the Azure Files CSI driver, but abstracted away). In this case, the simplest and most cost-effective solution was a General Purpose V2 storage account with one file share for all the content, as there is no real reason to split it across multiple file shares. This is by far the easiest component of the whole setup!

Database

The database is a lot trickier. The only officially supported database is MySQL 8.0 at this moment. However, when we started this at the end of 2022, MySQL 5.7 was still the required version. The upgrade proved to be a problem because the collation changed between versions, which meant doing a database migration! The migration path required us to execute commands on the Ghost container, which we were not too keen on, so we chose the easy way: we took a MySQL export, did some string replacements where the collation was defined, and hoped that we didn’t violate any of the collation rules (string lengths, etc.).

We also had to move to MySQL 8.0 because MySQL 5.7 reached end of life (EOL) in October 2023, as announced by Oracle. Below is the script that I used to replace the collation definitions manually, but use it at your own risk!

# Define the folder path
$folderPath = "Path to backup folder"

# Define the values to be replaced and their replacements.
# [ordered] keeps the replacements in the listed order; a plain hashtable
# does not guarantee enumeration order, and the second replacement only
# matches after the first one has run.
$replaceValues = [ordered]@{
    "utf8mb4" = "utf8"
    "utf8_0900_ai_ci" = "utf8_general_ci"
}

# Get all files within the folder
$files = Get-ChildItem -Path $folderPath -File -Recurse

# Iterate through each file and perform the replacements
foreach ($file in $files) {
    # Read the content of the file as a single string
    $content = Get-Content -Path $file.FullName -Raw

    # Replace the values
    foreach ($replaceValue in $replaceValues.GetEnumerator()) {
        $content = $content -replace [regex]::Escape($replaceValue.Key), $replaceValue.Value
    }

    # Write the modified content back to the file.
    # -NoNewline avoids appending an extra trailing newline on every run.
    Set-Content -Path $file.FullName -Value $content -NoNewline
}

# Output a message when the replacements are complete
Write-Host "Value replacements complete."

As you will see in the Bicep code, we had to disable secure transport (SSL). Sadly, we could not make it work, although according to the documentation it should be doable.

Compute

For compute we of course chose the state-of-the-art Azure Container Apps, which after a year finally has the most requested features implemented, like Key Vault integration, storage mounts, workload profiles and much more! For scaling we use the built-in KEDA autoscaler, configured with the default HTTP scaler, which is useful as we’re hosting a website. One thing to note: set your minimum number of replicas to 1 for websites. Otherwise you’ll have a warm-up time, as by default the service scales to 0.
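To make the scaling settings more concrete, here is a minimal sketch of what a scale block with an explicit HTTP rule could look like in Bicep. The rule name and the threshold of 50 concurrent requests are illustrative assumptions, not values taken from this blog’s setup; only the minimum of 1 replica comes from the text above.

```bicep
// Sketch only: a scale block for the `template` section of a container app.
// 'http-concurrency' and the value '50' are hypothetical.
var scaleSettings = {
  minReplicas: 1 // keep one replica warm to avoid a cold start
  maxReplicas: 3
  rules: [
    {
      name: 'http-concurrency'
      http: {
        metadata: {
          // scale out when a replica handles more than 50 concurrent requests
          concurrentRequests: '50'
        }
      }
    }
  ]
}

output scaleSettings object = scaleSettings
```

The object can be assigned to `template.scale` of a `Microsoft.App/containerApps` resource. Leaving out `rules` keeps the platform’s default HTTP scaling behaviour.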

External mailing

Last but not least is the Ghost mailing integration. By default, Ghost advertises its integration with Mailgun for both login confirmation mails and bulk newsletter mailing. However, Mailgun is a paid service, and since this is a hobby project, we tried to cheap out. Connecting to Exchange Online worked fine via SMTP. However, even after adding the Container Apps environment outbound IP to an SPF record and enabling DKIM, we were unable to get the verification mails delivered without some of them ending up in junk. So in the end we decided to use Mailgun after all. The configuration went without a hitch, but there are some things to pay attention to:

- Use the flex plan. It’s not shown on their site and has to be requested via support (credit card required), but it has these features:

  - 1000 messages per month for free and $1 per 1000 extra messages

  - Custom domains

  - 5 routes

  - Long data retention of 5 days

- Don’t use the sending API key; use a general account API key instead

Infrastructure as Code

As I am most proficient in Azure Bicep and our solution is hosted in Microsoft Azure, we chose Bicep as our language of choice. I will go over each module of the code and add some annotations. The code supports private endpoints; in our case, however, they haven’t been enabled to save on costs (I know, not the best of practices).

Network

//--------------------
// Parameters
//--------------------
param vnetConfig object
param location string
param environment string
param application string

//--------------------
// Targetscope
//--------------------
targetScope = 'resourceGroup'

//--------------------
// Variables
//--------------------

//--------------------
// Virtual Network
//--------------------
resource virtualNetwork 'Microsoft.Network/virtualNetworks@2023-06-01' = {
  name: 'vnet-${application}-${environment}-${location}-001'
  location: location
  properties: {
    addressSpace: {
      addressPrefixes: vnetConfig.addressPrefixes
    }
    subnets: [for subnet in vnetConfig.subnets: {
      name: 'snet-${subnet.name}-${environment}-${location}-001'
      properties: {
        addressPrefix: subnet.addressPrefix
      }
    }]
  }
}

A very simple module that creates a virtual network with, in our case, one subnet for all services, as this is a very small application. Microsoft tells us that you should use one subnet per private endpoint resource type; however, this seems like a lot of overhead for our use case (and for a lot of customers as well!).
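The module only reads `addressPrefixes` and, per subnet, `name` and `addressPrefix` from `vnetConfig`. As a sketch of what such an object could look like, here it is written as a parameter default. The address ranges and the subnet name `aca` are hypothetical; size the subnet according to the Container Apps requirements for your environment type.

```bicep
// Sketch only: example shape of the vnetConfig object the network module expects.
// All values below are illustrative assumptions.
param vnetConfig object = {
  addressPrefixes: [
    '10.0.0.0/16'
  ]
  subnets: [
    {
      name: 'aca' // becomes 'snet-aca-<environment>-<location>-001'
      addressPrefix: '10.0.0.0/23'
    }
  ]
}
```

In practice you would put these values in the bicepparam file rather than in a default, so the module itself stays environment-agnostic.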

Storage

//--------------------
// Parameters
//--------------------
param location string
param environment string
param application string
param acaConfig object
param virtualNetworkId string?
param subnetId string?

//--------------------
// Targetscope
//--------------------
targetScope = 'resourceGroup'

//--------------------
// Variables
//--------------------
var storageAccountFileURL = 'privatelink.file.${az.environment().suffixes.storage}'
var privateEndpoint = (empty(virtualNetworkId) || empty(subnetId)) ? false : true

//--------------------
// Storage infra
//--------------------

// Storage account
resource storageAccount 'Microsoft.Storage/storageAccounts@2023-01-01' = {
  name: acaConfig.storageAccountName
  tags: {
    'hidden-title': 'st-${application}-${environment}-${location}-001'
  }
  location: location
  sku: {
    name: 'Standard_GRS'
  }
  kind: 'StorageV2'
  properties: {
    accessTier: 'Hot'
    publicNetworkAccess: (privateEndpoint) ? 'Disabled' : 'Enabled'
    minimumTlsVersion: 'TLS1_2'
    networkAcls: {
      bypass: 'AzureServices'
      defaultAction: 'Allow'
    }
  }
}

// Storage account private endpoint
resource privateStorageAccountEndpoint 'Microsoft.Network/privateEndpoints@2023-06-01' = if (privateEndpoint) {
  name: 'pep-${application}storage-${environment}-${location}-001'
  location: location
  properties: {
    subnet: empty(subnetId) ? null : {
      id: subnetId
    }
    customNetworkInterfaceName: 'nic-${application}storage-${environment}-${location}-001'
    privateLinkServiceConnections: [{
      name: 'pl-file-${environment}-${location}-001'
      properties: {
        privateLinkServiceId: storageAccount.id
        groupIds: [
          'file'
        ]
        privateLinkServiceConnectionState: {
          status: 'Approved'
          description: 'Auto-Approved'
          actionsRequired: 'None'
        }
      }
    }]
  }
}

// Storage account file private dns zone
resource privateStorageAccountFileDNSZone 'Microsoft.Network/privateDnsZones@2020-06-01' = if (privateEndpoint) {
  name: storageAccountFileURL
  location: 'global'
}

// Storage account file private dns zone virtual network link
resource privateStorageAccountFileDNSZoneLink 'Microsoft.Network/privateDnsZones/virtualNetworkLinks@2020-06-01' = if (privateEndpoint) {
  name: 'vnl-storageaccountfile-${application}-${environment}-${location}-001'
  location: 'global'
  parent: privateStorageAccountFileDNSZone
  properties: {
    registrationEnabled: false
    virtualNetwork: empty(virtualNetworkId) ? null : {
      id: virtualNetworkId
    }
  }
}

// Storage account private dns zone group
resource privateStorageAccountDNSZoneGroup 'Microsoft.Network/privateEndpoints/privateDnsZoneGroups@2023-06-01' = if (privateEndpoint) {
  name: 'default'
  parent: privateStorageAccountEndpoint
  properties: {
    privateDnsZoneConfigs: [
      {
        name: storageAccountFileURL
        properties: {
          privateDnsZoneId: privateStorageAccountFileDNSZone.id
        }
      }
    ]
  }
}

// File service
resource fileService 'Microsoft.Storage/storageAccounts/fileServices@2023-01-01' = {
  name: 'default'
  parent: storageAccount
  properties: {
    shareDeleteRetentionPolicy: {
      enabled: true
      days: 7
    }
  }
}

// File share
resource websiteContentShare 'Microsoft.Storage/storageAccounts/fileServices/shares@2023-01-01' = {
  name: 'websitecontent'
  parent: fileService
  properties: {
    accessTier: 'TransactionOptimized'
    shareQuota: 5120
    enabledProtocols: 'SMB'
  }
}

var storageAccountKey = storageAccount.listKeys().keys[0].value
output storageAccountKey string = storageAccountKey
output storageAccountName string = storageAccount.name
output websiteContentShareName string = websiteContentShare.name

Half of our meat and potatoes: this is the storage for all the static data, mostly images and some files saved in the blog articles. We are using Standard GRS, which might be overkill as our database and compute aren’t region resilient! The data is stored on a file share called websitecontent, which is transaction optimized. At this very moment we are at 190 MB used, so the settings don’t matter too much. If required, the private endpoint can be enabled via the bicepparam file.
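One thing to be aware of: module outputs are stored in the deployment history, so a storage account key passed out as an output can be read by anyone who is allowed to view the deployment. A possible alternative, sketched below under the assumption that the consuming module knows the storage account name, is to reference the account as an existing resource and call `listKeys()` where the key is actually needed. The parameter names are hypothetical and this is not how the code in this post is wired up.

```bicep
// Sketch only: resolve the storage key inside the module that mounts the share,
// instead of passing it around as a module output. Parameter names are hypothetical.
param storageAccountName string
param websiteContentShareName string
param containerAppsEnvironmentName string

resource storageAccount 'Microsoft.Storage/storageAccounts@2023-01-01' existing = {
  name: storageAccountName
}

resource containerAppsEnvironment 'Microsoft.App/managedEnvironments@2023-05-01' existing = {
  name: containerAppsEnvironmentName
}

resource contentMount 'Microsoft.App/managedEnvironments/storages@2023-05-01' = {
  name: 'contentmount'
  parent: containerAppsEnvironment
  properties: {
    azureFile: {
      accountName: storageAccount.name
      shareName: websiteContentShareName
      accessMode: 'ReadWrite'
      // listKeys() is evaluated during deployment; the key never becomes an output
      accountKey: storageAccount.listKeys().keys[0].value
    }
  }
}
```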

Database

//--------------------
// Parameters
//--------------------
param location string
param environment string
param application string
param dbConfig object
@secure()
param dbPassword string
param containerAppName string
param virtualNetworkId string?
param subnetId string?

//--------------------
// Targetscope
//--------------------
targetScope = 'resourceGroup'

//--------------------
// Variables
//--------------------
var mysqlURL = 'privatelink.mysql.database.azure.com'
var privateEndpoint = (empty(virtualNetworkId) || empty(subnetId)) ? false : true

//--------------------
// Database infra
//--------------------

// MySQL flexible server
resource mySQLServer 'Microsoft.DBforMySQL/flexibleServers@2023-06-30' = {
  name: dbConfig.serverName
  tags: {
    'hidden-title': 'mysql-${application}-${environment}-${location}-001'
  }
  location: location
  sku: {
    name: 'Standard_B1ms'
    tier: 'Burstable'
  }
  properties: {
    administratorLogin: dbConfig.username
    administratorLoginPassword: dbPassword
    highAvailability: {
      mode: 'Disabled'
    }
    storage: {
      storageSizeGB: 20
      iops: 360
      autoGrow: 'Enabled'
    }
    backup: {
      backupRetentionDays: 7
      geoRedundantBackup: 'Disabled'
    }
    network: {
      publicNetworkAccess: (privateEndpoint) ? 'Disabled' : 'Enabled'
    }
    version: '8.0.21'
  }
}

// MySQL Database
resource mySQLDatabase 'Microsoft.DBforMySQL/flexibleServers/databases@2023-06-30' = {
  name: dbConfig.dbname
  parent: mySQLServer
}

// MySQL disable TLS
resource mySQLSSLConfig 'Microsoft.DBforMySQL/flexibleServers/configurations@2023-06-30' = {
  name: 'require_secure_transport'
  parent: mySQLServer
  properties: {
    value: 'OFF'
    source: 'user-override'
  }
}

// Reference existing containerapp
resource containerApp 'Microsoft.App/containerApps@2023-05-01' existing = {
  name: containerAppName
}

// MySQL add firewall rule
resource mySQLFirewallRule 'Microsoft.DBforMySQL/flexibleServers/firewallRules@2023-06-30' = {
  name: 'allow-aca-outbound-ip'
  parent: mySQLServer
  properties: {
    startIpAddress: containerApp.properties.outboundIpAddresses[0]
    endIpAddress: containerApp.properties.outboundIpAddresses[0]
  }
}

// MySQL private endpoint
resource privateMySQLEndpoint 'Microsoft.Network/privateEndpoints@2023-06-01' = if (privateEndpoint) {
  name: 'pep-${application}mysql-${environment}-${location}-001'
  location: location
  properties: {
    subnet: empty(subnetId) ? null : {
      id: subnetId
    }
    customNetworkInterfaceName: 'nic-${application}mysql-${environment}-${location}-001'
    privateLinkServiceConnections: [{
      name: 'pl-mysql-${environment}-${location}-001'
      properties: {
        privateLinkServiceId: mySQLServer.id
        groupIds: [
          'mysqlServer'
        ]
        privateLinkServiceConnectionState: {
          status: 'Approved'
          description: 'Auto-Approved'
          actionsRequired: 'None'
        }
      }
    }]
  }
}

// MySQL private dns zone
resource privateMySQLDNSZone 'Microsoft.Network/privateDnsZones@2020-06-01' = if (privateEndpoint) {
  name: mysqlURL
  location: 'global'
}

// MySQL private dns zone virtual network link
resource privateMySQLDNSZoneLink 'Microsoft.Network/privateDnsZones/virtualNetworkLinks@2020-06-01' = if (privateEndpoint) {
  name: 'vnl-mysql-${application}-${environment}-${location}-001'
  location: 'global'
  parent: privateMySQLDNSZone
  properties: {
    registrationEnabled: false
    virtualNetwork: empty(virtualNetworkId) ? null : {
      id: virtualNetworkId
    }
  }
}

// MySQL private dns zone group
resource privateMySQLDNSZoneGroup 'Microsoft.Network/privateEndpoints/privateDnsZoneGroups@2023-06-01' = if (privateEndpoint) {
  name: 'default'
  parent: privateMySQLEndpoint
  properties: {
    privateDnsZoneConfigs: [
      {
        name: mysqlURL
        properties: {
          privateDnsZoneId: privateMySQLDNSZone.id
        }
      }
    ]
  }
}

The database is where things get interesting. The storage account can be called a true Azure native service, but MySQL is a “third party” database offered in Azure as a PaaS service. As you can see, we run it on a B1ms machine, which is the smallest tier available, with a very low 360 IOPS and a backup retention of 7 days. We are now on version 8.0 of MySQL, as opposed to 5.7 in the past. As with the storage solution, the private endpoint is enabled via the bicepparam file. The code above also contains the setting that disables the SSL requirement. A great amount of time was spent trying to make SSL work, but in the end I couldn’t get it to work. However, the traffic runs over the Azure backbone network, so interception will be quite hard, and the impact of the traffic being listened to is not much of a concern either. The public endpoint is protected by a firewall rule, so only the public IP of the Azure Container Apps environment is allowed.
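The module reads three properties from the untyped `dbConfig` object: `serverName`, `username` and `dbname`. If your Bicep version supports user-defined types, one way to make that contract explicit is a typed parameter. This is a sketch of that idea, not part of the code used for this blog; the type name `dbConfigType` is hypothetical.

```bicep
// Sketch only: a user-defined type describing what the module reads from dbConfig.
type dbConfigType = {
  serverName: string
  username: string
  dbname: string
}

param dbConfig dbConfigType

// Same host name pattern the Ghost container uses to reach the server
output serverFqdn string = '${dbConfig.serverName}.mysql.database.azure.com'
```

With a typed parameter, a missing or misspelled property in the bicepparam file is reported when the template is compiled instead of surfacing halfway through a deployment.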

Backup

//--------------------
// Parameters
//--------------------
param location string
param environment string
param application string
param storageAccountName string
param websiteContentShareName string
param virtualNetworkId string?
param subnetId string?

//--------------------
// Targetscope
//--------------------
targetScope = 'resourceGroup'

//--------------------
// Variables
//--------------------
var backupURL = 'privatelink.we.backup.windowsazure.com'
var privateEndpoint = (empty(virtualNetworkId) || empty(subnetId)) ? false : true

//--------------------
// Backup infra
//--------------------

// Recovery services vault for storage account backup
resource recoveryServiceVault 'Microsoft.RecoveryServices/vaults@2023-06-01' = {
  name: 'rsv-${application}-${environment}-${location}-001'
  location: location
  sku: {
    name: 'RS0'
    tier: 'Standard'
  }
  identity: {
    type: 'SystemAssigned'
  }
  properties: {
    publicNetworkAccess: 'Enabled'
    securitySettings: {
      immutabilitySettings: {
        state: 'Disabled'
      }
    }
  }
}

// Recovery services vault private endpoint
resource privateRecoveryServiceVaultEndpoint 'Microsoft.Network/privateEndpoints@2023-06-01' = if (privateEndpoint) {
  name: 'pep-${application}backup-${environment}-${location}-001'
  location: location
  properties: {
    subnet: {
      id: subnetId
    }
    customNetworkInterfaceName: 'nic-${application}backup-${environment}-${location}-001'
    privateLinkServiceConnections: [{
      name: 'pl-backup-${environment}-${location}-001'
      properties: {
        privateLinkServiceId: recoveryServiceVault.id
        groupIds: [
          'AzureBackup'
        ]
        privateLinkServiceConnectionState: {
          status: 'Approved'
          description: 'Auto-Approved'
          actionsRequired: 'None'
        }
      }
    }]
  }
}

// Recovery services vault private dns zone
resource privateRecoveryServiceVaultDNSZone 'Microsoft.Network/privateDnsZones@2020-06-01' = if (privateEndpoint) {
  name: backupURL
  location: 'global'
}

// Recovery services vault private dns zone virtual network link
resource privateRecoveryServiceVaultDNSZoneLink 'Microsoft.Network/privateDnsZones/virtualNetworkLinks@2020-06-01' = if (privateEndpoint) {
  name: 'vnl-backup-${application}-${environment}-${location}-001'
  location: 'global'
  parent: privateRecoveryServiceVaultDNSZone
  properties: {
    registrationEnabled: false
    virtualNetwork: {
      id: virtualNetworkId
    }
  }
}

// Recovery services vault private dns zone group
resource privateRecoveryServiceVaultDNSZoneGroup 'Microsoft.Network/privateEndpoints/privateDnsZoneGroups@2023-06-01' = if (privateEndpoint) {
  name: 'default'
  parent: privateRecoveryServiceVaultEndpoint
  properties: {
    privateDnsZoneConfigs: [
      {
        name: backupURL
        properties: {
          privateDnsZoneId: privateRecoveryServiceVaultDNSZone.id
        }
      }
    ]
  }
}

// Reference existing storageaccount
resource storageAccount 'Microsoft.Storage/storageAccounts@2023-01-01' existing = {
  name: storageAccountName
}

// Reference existing file service (always named 'default')
resource fileService 'Microsoft.Storage/storageAccounts/fileServices@2023-01-01' existing = {
  parent: storageAccount
  name: 'default'
}

// Reference existing fileshare (a share is a child of the file service, not of the storage account)
resource websiteContentShare 'Microsoft.Storage/storageAccounts/fileServices/shares@2023-01-01' existing = {
  parent: fileService
  name: websiteContentShareName
}

// Recovery services vault backup policy
resource storageAccountBackupPolicy 'Microsoft.RecoveryServices/vaults/backupPolicies@2023-06-01' = {
  name: 'bkpol-${application}backup-${environment}-${location}-001'
  parent: recoveryServiceVault
  properties: {
    backupManagementType: 'AzureStorage'
    workLoadType: 'AzureFileShare'
    timeZone: 'Romance Standard Time'
    schedulePolicy: {
      schedulePolicyType: 'SimpleSchedulePolicy'
      scheduleRunFrequency: 'Daily'
      scheduleRunTimes: [
        '2020-01-01T19:30:00.000Z'
      ]
    }
    retentionPolicy: {
      retentionPolicyType: 'LongTermRetentionPolicy'
      dailySchedule: {
        retentionDuration: {
          count: 30
          durationType: 'Days'
        }
        retentionTimes: [
          '2020-01-01T19:30:00.000Z'
        ]
      }
    }
  }
}

// Recovery services vault protectionContainer
resource storageAccountProtectionContainer 'Microsoft.RecoveryServices/vaults/backupFabrics/protectionContainers@2023-06-01' = {
  #disable-next-line use-parent-property
  name: '${recoveryServiceVault.name}/Azure/storagecontainer;Storage;${resourceGroup().name};${storageAccount.name}'
  properties: {
    backupManagementType: 'AzureStorage'
    containerType: 'StorageContainer'
    sourceResourceId: storageAccount.id
  }
}

// Recovery services vault protectedItem
resource storageAccountProtectedItem 'Microsoft.RecoveryServices/vaults/backupFabrics/protectionContainers/protectedItems@2023-06-01' = {
  name: 'AzureFileShare;${websiteContentShare.name}'
  parent: storageAccountProtectionContainer
  properties: {
    protectedItemType: 'AzureFileShareProtectedItem'
    sourceResourceId: storageAccount.id
    policyId: storageAccountBackupPolicy.id
  }
  dependsOn: [
    recoveryServiceVault
  ]
}

Backup for the storage account is also included, using a Recovery Services Vault. A daily backup policy with 30 days of retention is enabled for the file share by default, so no action is required on the user side to make this work!

Container App (Environment)

//--------------------
// Parameters
//--------------------
param location string
param environment string
param application string
param acaConfig object
param dbConfig object
@secure()
param dbPassword string
@secure()
param mailPassword string
param logAnalyticsWorkspaceName string
@secure()
param storageAccountKey string
param storageAccountName string
param websiteContentShareName string
param subnetId string?
param containerAppName string

//--------------------
// Targetscope
//--------------------
targetScope = 'resourceGroup'

//--------------------
// Variables
//--------------------
var containers = [
  {
    image: 'docker.io/ghost:latest'
    name: '${environment}-${location}-ca-website'
    env: containerVars
    resources: {
      cpu: 1
      memory: '2Gi'
    }
    probes: containerProbes
    volumeMounts: [
      {
        volumeName: 'contentmount'
        mountPath: '/var/lib/ghost/content'
      }
    ]
  }
]
var containerVolumes = [
  {
    name: 'contentmount'
    storageType: 'AzureFile'
    storageName: 'contentmount'
  }
]
var containerProbes = [
  {
    type: 'Liveness'
    failureThreshold: 3
    httpGet: {
      path: '/ghost/#/signin'
      port: 2368
      scheme: 'HTTP'
    }
    initialDelaySeconds: 5
    periodSeconds: 15
    successThreshold: 1
    timeoutSeconds: 1
  }
  {
    type: 'Readiness'
    failureThreshold: 3
    initialDelaySeconds: 5
    periodSeconds: 10
    successThreshold: 1
    tcpSocket: {
      port: 2368
    }
    timeoutSeconds: 5
  }
]
var containerSecrets = [
  {
    name: 'database-connection-password'
    value: dbPassword
  }
  {
    name: 'mail-connection-password'
    value: mailPassword
  }
]
var containerVars = [
  {
    name: 'database__client'
    value: 'mysql'
  }
  {
    name: 'database__connection__host'
    value: '${dbConfig.serverName}.mysql.database.azure.com'
  }
  {
    name: 'database__connection__user'
    value: dbConfig.username
  }
  {
    name: 'database__connection__password'
    secretRef: 'database-connection-password'
  }
  {
    name: 'database__connection__database'
    value: dbConfig.dbname
  }
  {
    name: 'database__connection__port'
    value: '3306'
  }
  {
    name: 'url'
    value: 'https://${acaConfig.url}/'
  }
  {
    name: 'mail__transport'
    value: 'SMTP'
  }
  {
    name: 'mail__options__service'
    value: 'Mailgun'
  }
  {
    name: 'mail__options__host'
    value: 'smtp.eu.mailgun.org'
  }
  {
    name: 'mail__options__port'
    value: '465'
  }
  {
    name: 'mail__options__secureConnection'
    value: 'true'
  }
  {
    name: 'mail__options__auth__user'
    value: acaConfig.smtpUserName
  }
  {
    name: 'mail__options__auth__pass'
    secretRef: 'mail-connection-password'
  }
  {
    name: 'NODE_ENV'
    value: 'production'
  }
  {
    name: 'mail__from'
    value: '[email protected]'
  }
]

//--------------------
// Container apps env
//--------------------

// Container Apps Environment
resource containerAppsEnvironment 'Microsoft.App/managedEnvironments@2023-05-01' = {
  name: 'cae-${application}-${environment}-${location}-001'
  location: location
  properties: {
    infrastructureResourceGroup: 'rg-aca-${environment}-${location}-001'
    vnetConfiguration: empty(subnetId) ? null : {
      internal: false
      infrastructureSubnetId: subnetId
    }
    appLogsConfiguration: {
      destination: 'log-analytics'
      logAnalyticsConfiguration: {
        customerId: logAnalyticsWorkspace.properties.customerId
        sharedKey: logAnalyticsWorkspace.listKeys().primarySharedKey
      }
    }
  }
}

// Container App Env Storagemount for content
resource containerAppsEnvStorageMountContent 'Microsoft.App/managedEnvironments/storages@2023-05-01' = {
  name: 'contentmount'
  parent: containerAppsEnvironment
  properties: {
    azureFile: {
      accountName: storageAccountName
      shareName: websiteContentShareName
      accessMode: 'ReadWrite'
      accountKey: storageAccountKey
    }
  }
}

// Container App Env Certificate
resource containerAppsEnvCertificate 'Microsoft.App/managedEnvironments/managedCertificates@2023-05-01' = {
  name: acaConfig.url
  parent: containerAppsEnvironment
  location: location
  properties: {
    domainControlValidation: 'HTTP'
    subjectName: acaConfig.url
  }
}

//--------------------
// Container app
//--------------------

// Reference existing loganalytics workspace
resource logAnalyticsWorkspace 'Microsoft.OperationalInsights/workspaces@2022-10-01' existing = {
  name: logAnalyticsWorkspaceName
}

// Container App
resource containerApp 'Microsoft.App/containerApps@2023-05-01' = {
  name: containerAppName
  location: location
  identity: {
    type: 'SystemAssigned'
  }
  properties: {
    managedEnvironmentId: containerAppsEnvironment.id
    configuration: {
      secrets: containerSecrets
      activeRevisionsMode: 'Single'
      ingress: {
        external: true
        targetPort: 2368
        transport: 'auto'
        customDomains: [
          {
            certificateId: containerAppsEnvCertificate.id
            name: acaConfig.url
            bindingType: 'SniEnabled'
          }
        ]
        allowInsecure: false
      }
    }
    template: {
      containers: containers
      scale: {
        minReplicas: 1
        maxReplicas: 3
      }
      volumes: containerVolumes
    }
  }
}

And finally the compute side: the Container Apps Environment and the App itself. At this moment we use the Ghost image directly from the Docker Hub registry. For production environments, a Container Registry is recommended so as to not be dependent on the third-party registry. As you can see, we group all our vars, secrets, volumes, … in variables because that makes it easier to change properties. First we deploy the Container Apps Environment, in which we redirect logs to the Log Analytics Workspace. After that, we define the volume mount, which in our case is an Azure File Share. In the background, this uses the AKS CSI driver for Azure Files. Something to note is the customDomains property, where we define the SNI (Server Name Indication) settings. This basically means you can put multiple applications behind the same ingress controller, with TLS offloading done for free by Microsoft. When we first set up the blog, this feature was very unstable; however, in the last few months it has been working well! As you can see in the code for the managedCertificate, we use HTTP validation, which means that when you first deploy this, you will need to add the ASUID record to your DNS zone and set an A record to the public IP of your Container Apps Environment.
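The two DNS records mentioned above can also be expressed in Bicep. The sketch below assumes the domain’s zone is hosted in Azure DNS in the same resource group, which this post does not state, so treat it as an illustration only. All parameter names are hypothetical; the two values come from the `customDomainVerificationId` property of the container app and the `staticIp` property of the Container Apps Environment.

```bicep
// Sketch only, assuming the zone lives in Azure DNS in this resource group.
param dnsZoneName string // hypothetical, e.g. 'example.com'
param hostLabel string // hypothetical, e.g. 'blog' for blog.example.com
param customDomainVerificationId string // containerApp.properties.customDomainVerificationId
param environmentStaticIp string // containerAppsEnvironment.properties.staticIp

resource dnsZone 'Microsoft.Network/dnsZones@2018-05-01' existing = {
  name: dnsZoneName
}

// TXT record that proves domain ownership
resource asuidRecord 'Microsoft.Network/dnsZones/TXT@2018-05-01' = {
  parent: dnsZone
  name: 'asuid.${hostLabel}'
  properties: {
    TTL: 3600
    TXTRecords: [
      {
        value: [
          customDomainVerificationId
        ]
      }
    ]
  }
}

// A record pointing the host name at the environment's public IP
resource aRecord 'Microsoft.Network/dnsZones/A@2018-05-01' = {
  parent: dnsZone
  name: hostLabel
  properties: {
    TTL: 3600
    ARecords: [
      {
        ipv4Address: environmentStaticIp
      }
    ]
  }
}
```

For an apex domain the record names would be `asuid` and `@` instead. If your DNS is hosted elsewhere, the same two records have to be created at that provider by hand.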

Miscellaneous

//--------------------
// Parameters
//--------------------
param location string
param environment string
param application string

//--------------------
// Targetscope
//--------------------
targetScope = 'resourceGroup'

//--------------------
// Variables
//--------------------

//--------------------
// Log Analytics
//--------------------

// Log Analytics Workspace
resource logAnalyticsWorkspace 'Microsoft.OperationalInsights/workspaces@2022-10-01' = {
  name: 'log-${application}-${environment}-${location}-001'
  location: location
  properties: {
    sku: {
      name: 'PerGB2018'
    }
    retentionInDays: 30
    features: {
      enableLogAccessUsingOnlyResourcePermissions: true
    }
  }
}

output logAnalyticsWorkspaceName string = logAnalyticsWorkspace.name

Apart from the Network Watcher, one of the most basic resources is the Log Analytics Workspace. It receives all container logs from the Azure Container Apps Environment.

//--------------------
// Parameters
//--------------------
param location string = deployment().location
param application string
param vnetConfig object = {}
param acaConfig object
param dbConfig object
param privateEndpoint bool
param keyVaultName string
param keyVaultResourceGroup string

@allowed(['prod', 'test'])
param environment string

//--------------------
// Targetscope
//--------------------
targetScope = 'subscription'

//--------------------
// Variables
//--------------------
var containerAppName = 'ca-${application}-${environment}-${location}-001'

//--------------------
// Basic infra
//--------------------
resource containerAppRG 'Microsoft.Resources/resourceGroups@2023-07-01' = {
  name: 'rg-${application}-${environment}-${location}-001'
  location: location
}

// Keyvault
resource keyVault 'Microsoft.KeyVault/vaults@2023-07-01' existing = {
  name: keyVaultName
  scope: resourceGroup(keyVaultResourceGroup)
}

// Network Infra
module networkInfra 'networkInfra.bicep' = if (privateEndpoint) {
  name: 'deploy_network'
  scope: containerAppRG
  params: {
    location: location
    application: application
    environment: environment
    vnetConfig: vnetConfig
  }
}

// Storage Infra
module storageInfra 'storageInfra.bicep' = {
  name: 'deploy_storage'
  scope: containerAppRG
  params: {
    location: location
    application: application
    environment: environment
    acaConfig: acaConfig
  }
}

// Database Infra
module databaseInfra 'databaseInfra.bicep' = {
  name: 'deploy_database'
  scope: containerAppRG
  params: {
    location: location
    application: application
    environment: environment
    containerAppName: containerAppName
    dbConfig: dbConfig
    dbPassword: keyVault.getSecret('MySQLPassword')
  }
  dependsOn: [
    containerApp
  ]
}

// LogAnalytics Infra
module logAnalyticsInfra 'logAnalyticsInfra.bicep' = {
  name: 'deploy_loganalytics'
  scope: containerAppRG
  params: {
    location: location
    application: application
    environment: environment
  }
}

// Backup Infra
module backupInfra 'backupInfra.bicep' = {
  name: 'deploy_backup'
  scope: containerAppRG
  params: {
    location: location
    application: application
    environment: environment
    storageAccountName: storageInfra.outputs.storageAccountName
    websiteContentShareName: storageInfra.outputs.websiteContentShareName
  }
  dependsOn: [
    containerApp
  ]
}

// ContainerApp
module containerApp 'containerApp.bicep' = {
  name: 'deploy_containerapp'
  scope: containerAppRG
  params: {
    location: location
    application: application
    environment: environment
    acaConfig: acaConfig
    dbConfig: dbConfig
    dbPassword: keyVault.getSecret('MySQLPassword')
    mailPassword: keyVault.getSecret('MailPassword')
    containerAppName: containerAppName
    logAnalyticsWorkspaceName: logAnalyticsInfra.outputs.logAnalyticsWorkspaceName
    storageAccountName: storageInfra.outputs.storageAccountName
    storageAccountKey: storageInfra.outputs.storageAccountKey
    websiteContentShareName: storageInfra.outputs.websiteContentShareName
  }
}

The main file calls all the modules above. As you can see, the network part is deployed conditionally, as are all private endpoints in the separate modules. Our Azure Key Vault is referenced here to retrieve the MySQL secret and the Mailgun API secret.
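A note on keyVault.getSecret(): Bicep only accepts it as the value of a module parameter that is decorated with @secure(), and the Key Vault itself must have enabledForTemplateDeployment turned on. As a rough sketch of what the receiving side of a module can look like (the secret name 'database-password' and the variable names are illustrative assumptions, not necessarily what our module uses):

```bicep
// Receiving side of a module, hypothetical excerpt

// getSecret() can only be passed to parameters marked as secure
@secure()
param dbPassword string

// Registered on the Container App under configuration.secrets
var secrets = [
  {
    name: 'database-password' // illustrative secret name
    value: dbPassword
  }
]

// Passed to the container as an env entry; Ghost reads nested
// configuration keys from variables separated by double underscores
var environmentVariables = [
  {
    name: 'database__connection__password'
    secretRef: 'database-password'
  }
]
```

This way the password never shows up in the parameter file or in the deployment history; the container only receives a reference to the secret.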

using 'main.bicep'

param application = 'blog'
param environment = 'prod'
param acaConfig = {
  url: 'domain.com'
  storageAccountName: ''
  smtpUserName: '[email protected]'
}
param dbConfig = {
  serverName: 'servername'
  username: 'username'
  dbname: 'dbname'
}
param keyVaultName = 'keyVault'
param keyVaultResourceGroup = 'keyVaultRG'
param privateEndpoint = false

Our last file is the bicepparam file, which is coupled to main.bicep and contains all non-secret values. With this file, we conclude the Bicep code for our blog infrastructure. Lots of improvements are possible, but the budget is sadly limited. More to come on optimizations and backup!

Conclusion

This article has been a year in the making. We have had some issues in the past with MPN subscriptions being deallocated/deactivated, but in the end, all turned out well. We have been very happy with the stability of both the Azure Container Apps solution and the Ghost blogging platform. The image versions have been updated automatically over the past year, keeping the software up to date without causing downtime, so that’s awesome. There are lots of improvements to be made, and more information to come on how to back up the storage account and database data to another subscription, but that’s for another article!